Database Performance: The Hidden Engine of Competitive Advantage


In today's digital economy, milliseconds matter — and not in abstract ways. Every additional millisecond of application response time erodes user satisfaction, increases abandonment rates, and compounds operational inefficiency.

Research consistently shows that customer patience is measured not in seconds, but in fractions of a second. According to Google's research, 53% of mobile users abandon a site if it takes longer than three seconds to load, and most abandonment decisions happen within the first 500 milliseconds.

In e-commerce, a study by Akamai found that a 100-millisecond delay in website load time can result in a 7% reduction in conversion rates.

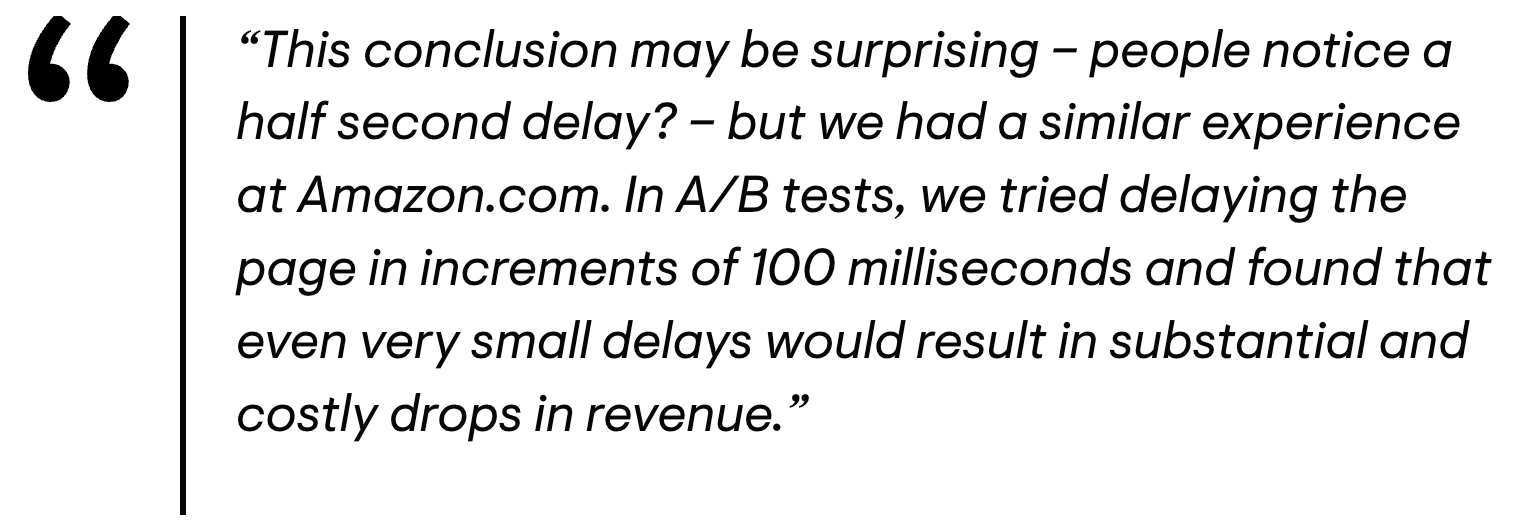

At Amazon, performance was treated as a direct business lever. According to Greg Linden, a former Amazon engineer, internal A/B tests showed that every additional 100 milliseconds of latency resulted in a measurable and costly drop in revenue. This insight has guided Amazon’s performance obsession ever since and remains one of the clearest illustrations that speed and revenue are tightly linked.

But the impact goes even deeper internally. Application slowness forces engineering teams to overcompensate: adding complex caching layers, scaling hardware vertically, and introducing brittle workarounds. All of these responses increase system complexity, technical debt, and operational risk, while masking the root cause instead of solving it.

Infrastructure costs also quietly inflate. In cloud environments, inefficient database access patterns result in more replicas, increased memory usage, and higher CPU requirements, often by orders of magnitude compared to optimized systems. The impact is usually hidden until bloated cloud bills arrive.

As Google's Mobile Page Speed Benchmark Study further demonstrated, the probability of a mobile site visitor bouncing increases by 123% as page load time grows from one to ten seconds.

At scale, a few hundred extra milliseconds per query, multiplied by millions of queries per day, silently accumulate into lost dollars, lost customers, and lost market position.

In a competitive environment where alternatives are just a click away, delivering fast and seamless digital experiences is no longer a differentiator — it has become a baseline expectation. And at the heart of every experience, fueling or throttling it, lies database performance.

The Real Cost of Database Latency

A slow database creates cascading consequences: When queries take longer to resolve, applications slow down. When applications slow down, customers abandon them. Small inefficiencies in data retrieval multiply into significant business losses over time.

Internally, sluggish data systems force engineers to overcompensate by adding manual caching, tuning queries reactively, and provisioning larger and more expensive cloud resources. These efforts create architectural complexity that not only drives up costs but also increases fragility.

If we extrapolate conservatively:

- For a SaaS company processing 10 million queries per day, a 200ms latency overhead can result in over 555 additional compute hours daily, often going unnoticed until the invoice arrives.

- For an e-commerce platform, a one-second delay can result in up to a 20% decrease in customer conversions (Google/SOASTA study).

- Across industries, IDC estimates that organizations lose between 20% and 30% of their annual revenue to operational inefficiencies, and database bottlenecks are often a significant contributor to this loss.
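The first figure above follows from simple arithmetic. The sketch below reproduces it, assuming the 200 ms overhead applies to every query and that each query occupies compute for the full extra duration (a simplification that ignores concurrency and idle waiting). The function and parameter names are hypothetical.

```python
def extra_compute_hours(queries_per_day: int, overhead_ms: float) -> float:
    """Total added query time per day, expressed in hours."""
    extra_seconds = queries_per_day * overhead_ms / 1000.0
    return extra_seconds / 3600.0


# 10 million queries/day, each 200 ms slower than it needs to be.
hours = extra_compute_hours(10_000_000, 200)
print(f"{hours:.1f} additional compute hours per day")  # 555.6
```

Halving the overhead halves the result, which is why small per-query savings matter at this volume.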

In short, database performance penalties are not just technical slowdowns. They are business erosions — slow, invisible, and devastating over time.

Scaling Smart: Why Traditional Solutions Fall Short

Common responses to database performance problems — such as adding replicas, building manual caching layers with tools like Redis or Memcached, or investing in larger machines — are often necessary but deeply inefficient.

These remedies address symptoms, not the underlying causes.

Scaling read replicas increases costs linearly but doesn’t eliminate the underlying latency.

Manual caching, often built on top of Redis or Memcached, introduces inconsistency risks, invalidation challenges, maintenance complexity, and architectural fragility, especially as applications grow and data freshness becomes critical.

These layers often require careful synchronization logic, manual expiry management, and constant tuning to avoid serving stale or incorrect data. Over time, the operational burden of maintaining these caching systems compounds, diverting engineering focus away from innovation and toward firefighting.
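To make the synchronization burden concrete, here is a minimal cache-aside sketch. It assumes an in-process dictionary standing in for a Redis or Memcached client and a plain dictionary standing in for the database; `ManualCache` and the `stock:42` key are hypothetical names chosen for illustration.

```python
import time
from typing import Callable, Dict, Tuple


class ManualCache:
    """Toy cache-aside layer; stands in for a Redis or Memcached client."""

    def __init__(self, ttl_seconds: float,
                 clock: Callable[[], float] = time.monotonic) -> None:
        self._ttl = ttl_seconds
        self._clock = clock
        self._entries: Dict[str, Tuple[float, object]] = {}

    def get(self, key: str, load: Callable[[], object]) -> object:
        entry = self._entries.get(key)
        if entry is not None and self._clock() < entry[0]:
            return entry[1]  # hit: may be stale if the database changed
        value = load()  # miss or expired: fall through to the database
        self._entries[key] = (self._clock() + self._ttl, value)
        return value

    def invalidate(self, key: str) -> None:
        self._entries.pop(key, None)


# Hypothetical "database": one product's stock level.
db = {"stock:42": 10}
cache = ManualCache(ttl_seconds=60)

first = cache.get("stock:42", lambda: db["stock:42"])  # loads 10
db["stock:42"] = 9                                     # a write the cache never sees
stale = cache.get("stock:42", lambda: db["stock:42"])  # still 10
cache.invalidate("stock:42")                           # every write path must do this
fresh = cache.get("stock:42", lambda: db["stock:42"])  # now 9
print(first, stale, fresh)  # 10 10 9
```

The stale read in the middle is the failure mode described above: correctness depends on every write path remembering to invalidate, or on a TTL short enough to hide the gap.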

Scaling up — investing in larger and more powerful machines — is a costly and fundamentally limited strategy: hardware improvements offer diminishing returns, physical constraints are inevitable, and costs escalate dramatically compared to optimizing software architectures.

Reactive query tuning addresses hotspots but does not systematically improve system-wide efficiency.

This is where incremental materialization technologies, like Readyset, change the equation.

Incremental View Maintenance (IVM), the foundation behind this approach, has been the subject of extensive academic research at institutions such as MIT, Stanford, and Harvard, which supports IVM as an efficient approach to data processing for modern, high-scale applications.

These studies have demonstrated that rethinking query execution through incrementally updated materializations can significantly reduce database load, improve latency, and enable the development of scalable real-time applications.

By intelligently caching query results and incrementally updating them in response to changes in the underlying database, Readyset enables applications to serve even complex analytical queries with sub-millisecond latency, without requiring changes to application code, compromising consistency, or burdening developers.
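The core idea of incremental maintenance can be illustrated with a toy example. The sketch below is not Readyset's implementation; it is a simplified illustration, assuming a single grouped aggregate over a hypothetical `orders` table, of how a cached result can be updated from each change instead of being recomputed from scratch.

```python
from collections import defaultdict
from typing import Dict, Tuple


class OrderTotalsView:
    """Toy materialization of:
    SELECT customer_id, COUNT(*), SUM(amount) FROM orders GROUP BY customer_id
    """

    def __init__(self) -> None:
        self._count: Dict[int, int] = defaultdict(int)
        self._total: Dict[int, int] = defaultdict(int)

    def on_insert(self, customer_id: int, amount_cents: int) -> None:
        # Apply only the delta; no rescan of the base table.
        self._count[customer_id] += 1
        self._total[customer_id] += amount_cents

    def on_delete(self, customer_id: int, amount_cents: int) -> None:
        self._count[customer_id] -= 1
        self._total[customer_id] -= amount_cents
        if self._count[customer_id] == 0:
            # Drop empty groups so the view matches the query result.
            del self._count[customer_id]
            del self._total[customer_id]

    def read(self, customer_id: int) -> Tuple[int, int]:
        # .get avoids creating empty groups in the defaultdicts on read.
        return (self._count.get(customer_id, 0),
                self._total.get(customer_id, 0))


view = OrderTotalsView()
view.on_insert(7, 1200)
view.on_insert(7, 800)
view.on_insert(9, 500)
view.on_delete(7, 800)
print(view.read(7))  # (1, 1200)
print(view.read(9))  # (1, 500)
print(view.read(1))  # (0, 0)
```

Each write costs constant work and each read is a dictionary lookup, regardless of how many orders exist. Production systems must also handle joins, updates, ordering, and failure recovery, which this sketch leaves out.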

Readyset customers have reported:

- Up to 90% reduction in query response times.

- 70–80% reduction in primary database read load.

- Material cost savings by avoiding replica sprawl and cloud overprovisioning.

These results are not hypothetical optimizations. They are strategic multipliers that change how companies grow, scale, and innovate.

Database Performance Is a Boardroom Issue

Database performance is no longer a concern reserved for backend engineering teams.

It is a strategic concern that belongs on every C-level agenda because it impacts:

- Revenue acceleration: Faster experiences lead to improved conversion rates, increased customer retention, and enhanced brand loyalty.

- Cost containment: Efficient database systems reduce infrastructure and operational expenditure.

- Innovation velocity: Less time spent firefighting performance issues frees engineers to build the future, not patch the present.

- Operational resilience: Well-architected data access paths reduce the risk of outages and incidents during scaling events.

Organizations that treat database performance seriously scale faster, innovate more confidently, and serve customers more effectively.

Those that postpone or underestimate it pay the price in dollars, reputation, and lost opportunities.

Moving Forward: From Reactive to Proactive

The message is clear:

- Investing early in database performance compounds value over time.

- Optimizing data access proactively is more cost-effective and intelligent than reacting to production emergencies.

- Leveraging modern materialization technologies, such as Readyset, transforms database performance from a risk into a competitive advantage.

In a world where milliseconds make millions, ensuring database performance is not optional; it's a strategic mindset.
