Collation improvements in Readyset

6 min read

•

4 months ago

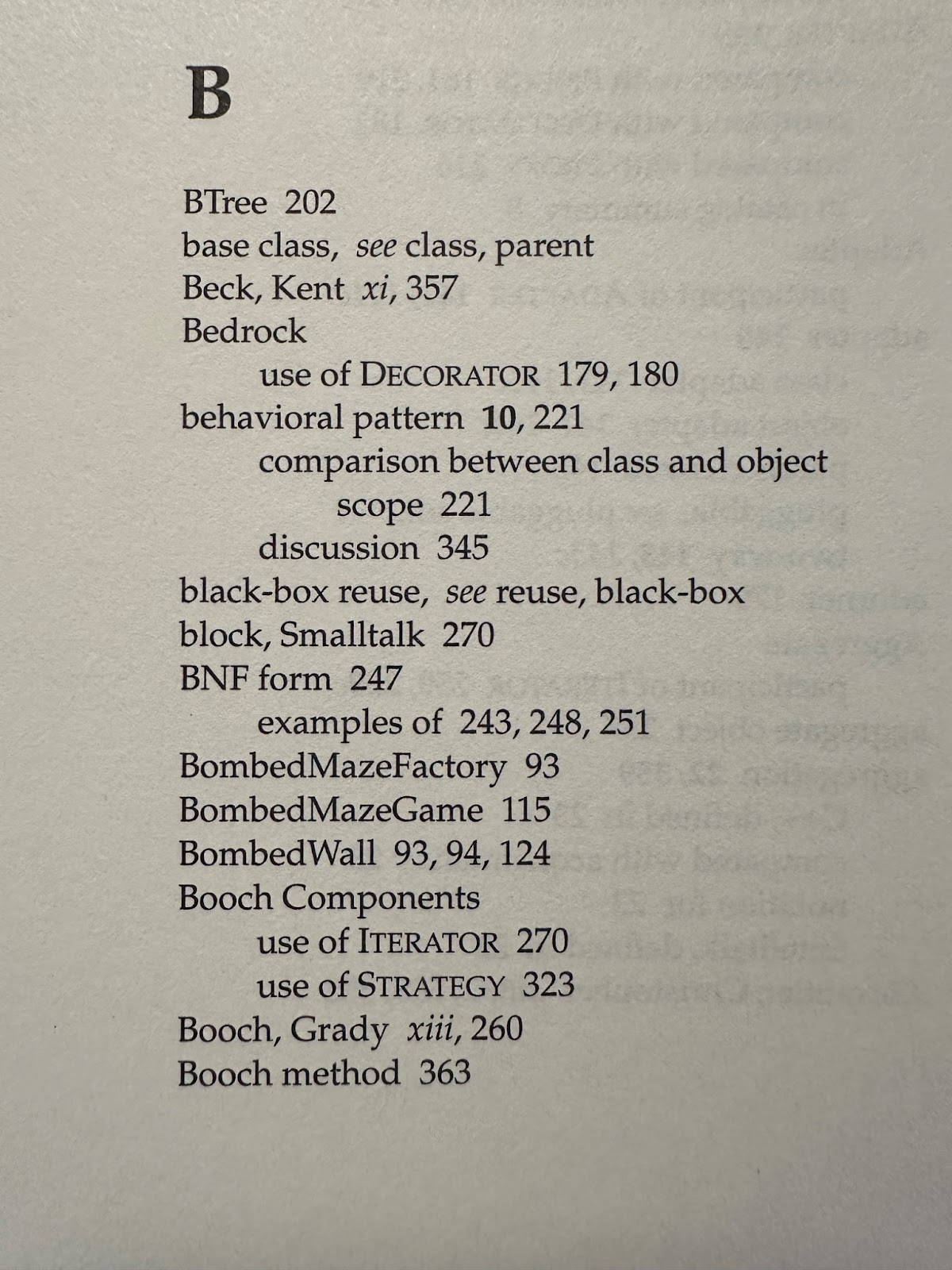

How often do you think about alphabetization? Software engineers don’t typically think a lot about it. How hard can it be to sort 26 letters, or maybe twice as many if you count lower and upper case? But examination of real world alphabetization quickly reveals that the problem is not trivial. Here’s a photograph of part of the index at the end of the Gang of Four’s book Design Patterns.

Right away, you can see that the cases are all mixed up. The lowercase Bs are mixed in with the capital Bs even though in ASCII or Unicode, capital letters sort numerically lower than lowercase letters. If these entries had been naïvely sorted by computer, you’d expect all the capital entries first, then the lowercase ones. And what about the first entry, “BTree,” which is sorted lower than “base”? “BTree” appears to have been sorted like “B-Tree” even though the hyphen isn’t printed.

Clearly something complicated happened when this index was produced, but these problems are just the tip of the iceberg.

What goes into a letter? Characters have a lot of attributes, and depending on your language and your context and personal preference, you may consider a greater or lesser number of them significant. The main attributes are:

- Base letter (A vs B vs C)

- Accents (e vs é vs è vs ë)

- Case or form (upper vs lower case, Hiragana vs Katakana)

- Punctuation

Comparing based on the base letter is called a primary strength comparison. Considering accents is secondary strength, and considering upper vs lower case is tertiary strength.

Three or four different things to consider may not sound too bad to you, but that’s because you’re probably an English speaker. Once you leave the Anglosphere, things get a lot worse very quickly because of course languages don’t agree on how to sort. You may not know that:

- Speakers of the same language in different places don’t always agree.

E.g. in French, accent differences are examined left to right, so

“cote” < “coté” < “côte” < “côté”

But in Canadian French, accents are examined right to left, so

“cote” < “côte” < “coté” < “côté”

- Some languages have multiple sort orders (e.g. German).

- Some languages sort a few special letters differently (in Spanish, “nz” < “ñ” < “o”).

- Some languages have “letters” composed of multiple letters (in Czech, “cz” < “ch”).

- Some languages sort single letters as multiple letters (in German, “ä” = “ae”, “ß” = “ss”).

- Korean is a special case because it sorts syllable by syllable, not letter by letter.

Collations in Readyset

Previously, Readyset did not handle most of these collation-related issues and had only two simple collations. The first relied on Rust’s native string comparison, which simply sorts strings by comparing their bytes lexicographically, which in effect sorts them by codepoint. This sorting did not match the MySQL default, which is accent-insensitive, case-insensitive. We also had a case-insensitive collation of uncertain correctness which unfortunately copied strings to compare them.

How to implement new collations is a question that is related to some unfortunate facts with respect to MySQL. The standard collection of libraries for performing collation is ICU (International Components for Unicode), which has a variant called ICU4C for C and C++ code, but when MySQL implemented Unicode collations, the developers decided not to use the ICU libraries. Instead, they implemented Unicode collations from scratch, but in a way that didn’t comply with the Unicode Collation Algorithm. This implementation then proceeded to evolve a couple of times over the years (leaving MySQL with these old implementations as legacy collations), but even today, decades later, the default collation still doesn't match Unicode or ICU.

One way they currently don’t match is that MySQL doesn’t handle contractions of multiple characters into a single character, as in the case of combining accents. You may not be aware that a string that displays as “é” can be encoded two ways: via a precomposed letter-E-plus-acute-accent character (encoded in UTF-8 as two bytes) or via the letter E plus a separate combining accent that applies to the previous character (a total of three bytes). According to Unicode, these two variations should compare as equal, but they are not equal in MySQL because, according to the documentation, “[a] combined character is considered different from the same character written with a single [U]nicode character in string comparisons”.

Plans to improve Readyset’s collation support

We wanted to rework our collations to provide maximal value and correctness in the cases we expected our users to exercise. We decided not to reverse engineer the MySQL collations to emulate the full unusual behaviors of MySQL’s collations. Instead, we decided to use a collation library that is more correct in an absolute sense even though it might not exactly match MySQL in some corner cases.

The collation library we used is ICU4X. This library is a brand new implementation in Rust of the ICU libraries’ functionality, not a wrapper around ICU4C. But unfortunately, ICU4X was missing a critical piece of code that we needed: collation keys.

A collation key does for hashing what a collation does for sorting: it’s a sequence of bytes derived from a string such that the lexicographic ordering of two collation keys is the same as the collated order of the original strings. Collation keys can thus be used to generate a collation-aware hash key for a string, which we needed to be able to do in our caches. (If a string key should be case-insensitive, then “a” needs to hash to the same place as “A”.)

As an example, here are sort keys for several similar strings at three different strengths.

Note that all three sort keys are the same when generated at primary strength, but when accents are considered at secondary strength, we get two different keys, and when case is additionally considered at tertiary strength, all four keys are different.

ICU considers some characters more similar than you may expect. For example, if we compare a normal S to the old fashioned long S form, we get:

Thus long S is an accent-level (secondary) difference from a normal S.

If we pop into Germany briefly, we might compare “ss” to “ß”. German considers these another example of a secondary level difference.

A wending into an open source project

Although the ICU4X project had an open issue on Github describing their desire to some day add collation key support, no one had gotten around to it yet. The recommended tack was to do a line-by-line port of about 500 lines of C++ code in ICU4C into Rust. After some examination of the code, I decided that I could do it, and Readyset confirmed that we were okay making the open source contribution.

It took about one week to translate the code, write a few tests, and conduct my own pre-review audit. Upon posting a pull request, however, I learned that the algorithm required 256 bits of per-collation data in ICU4C that were not yet present in ICU4X. One of the other project members took care of importing those bits, which required changes to both ICU4C and ICU4X and took about two weeks. Meanwhile, the ICU4X review process progressed. Because the change was somewhat significant in size, it took about four weeks for the review to conclude. But at long last, ICU4X can now generate collation keys!

At long last, better collation support in Readyset

Finally, I was able to switch Readyset over to new collations powered by ICU4X. The new collations should match MySQL most of the time. The only inconsistencies are related to comparisons between different scripts. The new collations, however, are significantly more accurate than before and should produce sorted, collated output that matches MySQL for expected use cases.

Epilogue

But our open source contributions weren’t done. After the initial collation key code was accepted, I first submitted a few minor cleanups. Then, shortly after we started using ICU4X in our product, we found a minor bug in the code we had contributed. So I submitted a small patch to fix the problems we’d uncovered.

Then a few weeks further on, someone in the ICU4X project found a conformance problem in the main part of the ICU4X collation code. The problem involved the highly special codepoint U+FFFE, which is supposed to sort lower than any other codepoint, even U+0000. Neither the collator nor the new collation key code handled that codepoint correctly, so the ICU4X maintainers alerted me. The good news was that the problem only affected collation keys generated at the identical level, a special collation strength that checks for minor bit-level encoding differences between strings that are otherwise equal. Since the problem would clearly be fixed by porting another 100 lines of C++ code related to identical level collation keys from ICU4C, I spent a few hours doing that, and I submitted that additional code to ICU4X, which the project accepted.

Authors