The Role of CAP Theorem in Modern Day Distributed Systems

7 min read

•

almost 2 years ago

Twenty years after its debut, what is the role of the CAP theorem in modern-day distributed systems?

Stumbling across the CAP theorem is like walking into a discussion (or a debate) already in progress. Imagine how others might feel walking into an argument amongst developers about which IDE is the best or which code editor is most productive. It’s hard to get your bearings, much less figure out what to think yourself.

Henry Robinson, Senior Staff Software Engineer at Slack, put it well, writing, “No subject appears to be more controversial to distributed systems engineers than the oft-quoted, oft-misunderstood CAP theorem.” The trouble for developers, however, is that you can’t treat it like a technical detail you can otherwise breeze past.

Over the years, people have claimed to have “beaten” the CAP theorem, people have questioned its usefulness and its relevance, and still others have argued it’s more relevant than ever. And at the bottom of this discourse is a proof that makes the CAP theorem more than a merely abstract argument.

In other words, developers don’t have to be distributed systems engineers to be beholden to the CAP theorem.

In this article, we’ll walk you through the CAP theorem, explain the basics of why it works, and show how developers can put it into practice without needing to become distributed systems experts themselves.

The CAP Theorem: What It Is and Why It Matters

Like all great theorems and frameworks, the CAP theorem is an acronym. CAP stands for Consistency, Availability, and Partition tolerance. Once you know the acronym, the theory itself is pretty simple: A distributed system cannot simultaneously be consistent, available, and partition tolerant.

Before proceeding, there’s a little nuance to explain.

According to the theorem, availability means that “every request received by a non-failing node in the system must result in a response.” If a client sends a data change request, the server has to accept the change. Not every server has to be available, but there must be enough individual servers available in a cluster to handle the request.

According to the theorem, partition tolerance means that “the network will be allowed to lose arbitrarily many messages sent from one node to another.”

Consistency, sometimes the most confusing element, refers to “strong” consistency. According to the theorem, “any read operation that begins after a write operation completes must return that value, or the result of a later write operation.”

Don’t confuse this sense of consistency with the one in a related concept, ACID (atomicity, consistency, isolation, durability). In ACID, consistency refers to ensuring a database is consistent before and after a transaction via integrity constraints.

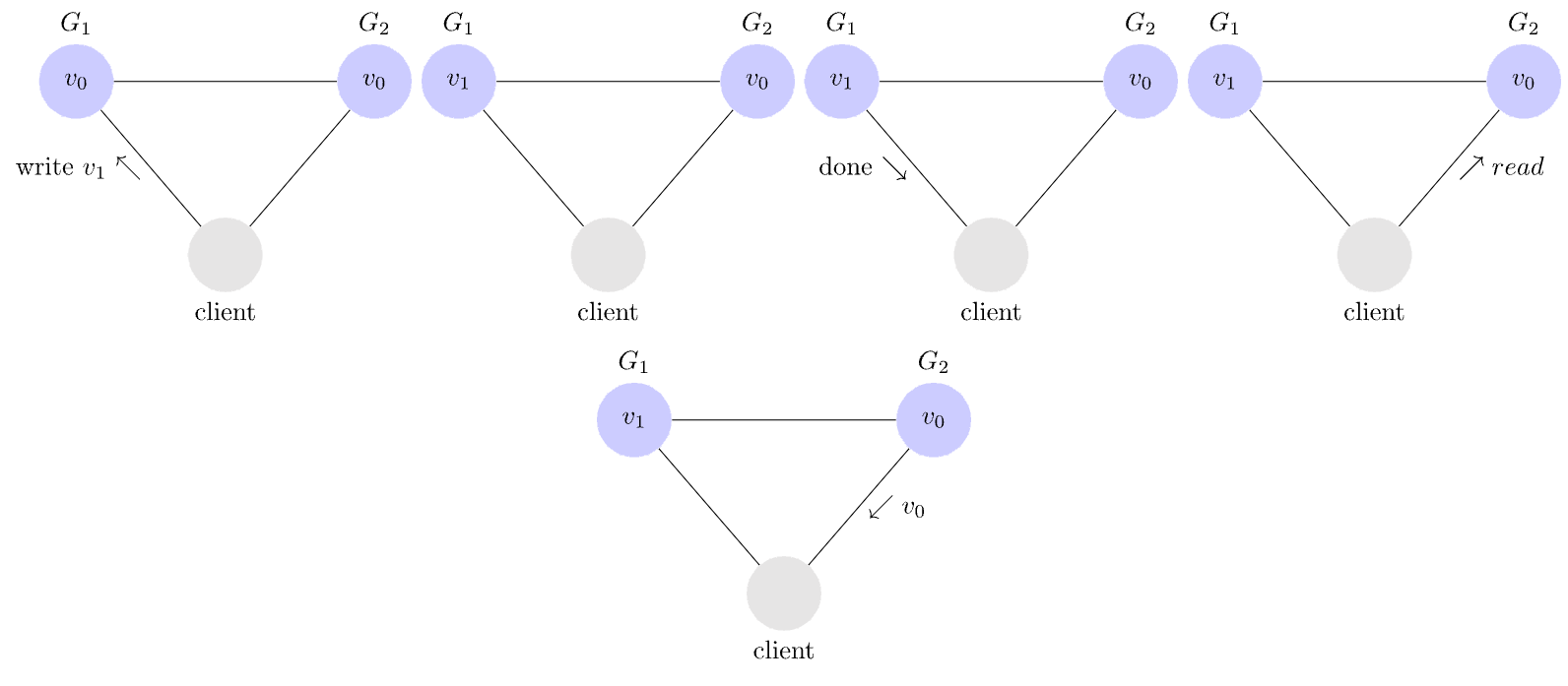

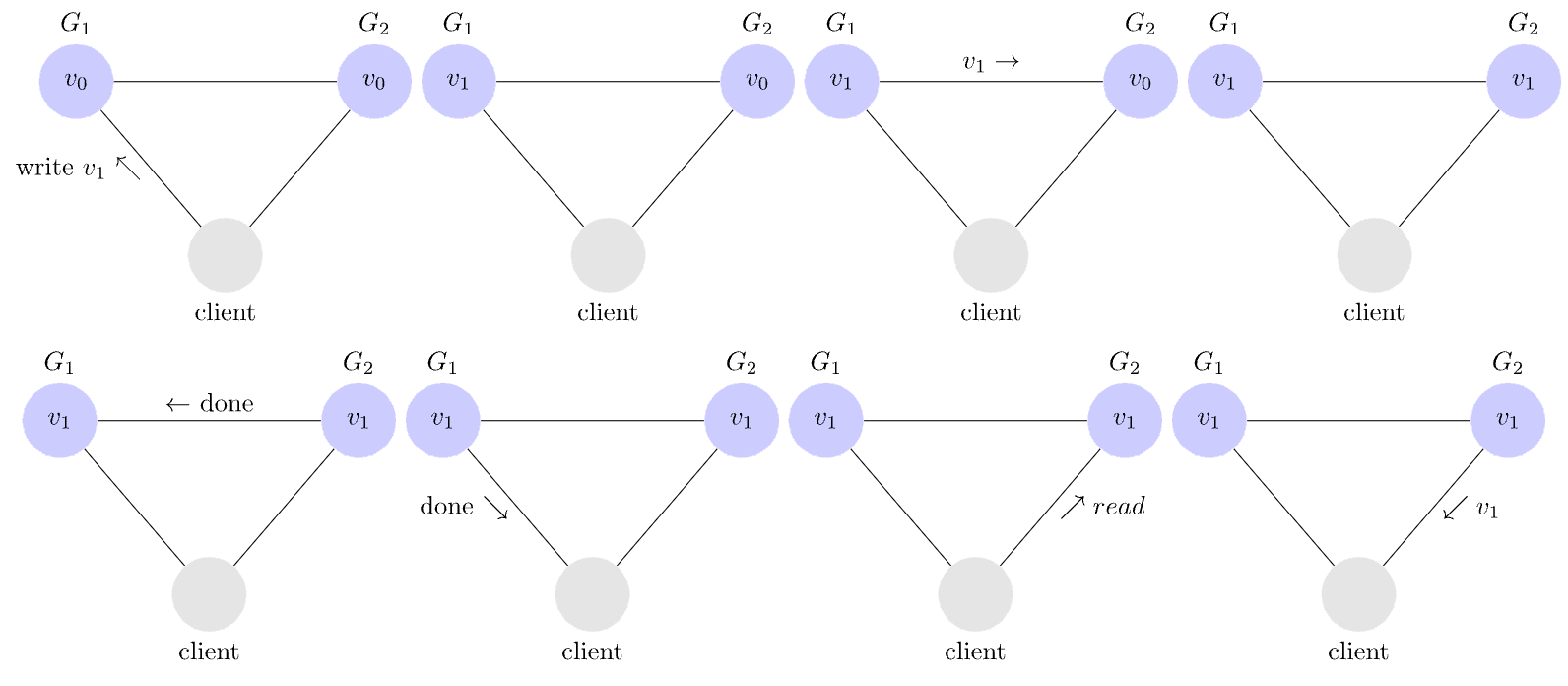

As Google engineer Michael Whittaker illustrates below, an inconsistent system involves a client getting stale data.

And a consistent system, as Whittaker illustrates again, is one where the client gets the most up-to-date data.

Eric Brewer, now vice-president of infrastructure at Google and a professor emeritus of computer science at the University of California, Berkeley, formulated the original theorem. In 2000, Brewer gave a keynote called “Towards Robust Distributed Systems” at the Principles of Distributed Computing conference.

In this keynote, he laid out the basic principles of the CAP theorem. Two years later, MIT researchers Seth Gilbert and Nancy Lynch proved the theorem in their paper “Brewer’s conjecture and the feasibility of consistent, available, partition-tolerant web services.” And in the years since, others have even provided handy illustrated guides to the proof.

According to Brewer, the original inspiration for the CAP theorem resulted from discussions in the mid-1990s about building “cluster-based wide-area systems,” including search engines, proxy caches, and content distribution systems. At the time, he writes, revenue goals and contract specifications ensured “system availability was at a premium.” The CAP theorem emerged due to facing this premium and hitting limits in availability or consistency.

Two decades later, the CAP theorem stands tall – whether as a monument or a target.

Consistency, Availability, and Partition Tolerance – You Can Only Pick Two (or Can You?)

The CAP theorem plays off a classic saying – “Fast, cheap, and good, but you can only pick two.” It’s an evocative way to explain tradeoffs. Just as an artist can’t turn around a beautiful portrait without a high price tag or a long timeline, distributed systems face limits after being partitioned and made to be both consistent and available.

In reality, however, the triplicate model doesn’t quite match. The inherent instability of networks makes partitioning necessary, meaning you have to choose between consistency and availability.

One of the biggest mistakes in distributed computing is the assumption that networks can be fully reliable (the first of the eight fallacies of distributed computing described by Sun Microsystems). As Robert Greiner writes, “Networks and parts of networks go down frequently and unexpectedly. Network failures happen to your system and you don't get to choose when they occur.”

Distributed systems have to tolerate partitions. That third of the CAP theorem is chosen ahead of time, leaving you only two dials to turn: Consistency and Availability.

The choice between consistency and availability can be broad or narrow, expanding to entire systems and products or narrowed to particular use cases and functions.

Some companies choose to be either AP or CP, meaning they prioritize availability over consistency (or vice versa). Cockroach Labs, for example, “chooses consistency, and is therefore a CP system in the terminology of the CAP theorem (as opposed to an AP system, which favors availability over consistency).”

Other examples include ZooKeeper and Facebook, which both relax availability and Amazon Dynamo and Yahoo’s PNUTS system, which both relax consistency.

For many systems, however, CP vs. AP is not a binary decision one needs to make at the outset and then bake into the system. Many systems are dynamic and can be configured in a CP or AP style depending on different use cases and requests. Every implementation takes place somewhere on the spectrum between the two.

Daniel J. Abadi, then computer science professor at Yale University, even rewrote CAP as PACELC to illustrate this spectrum: “If there is a partition (P), how does the system trade off availability and consistency (A and C); else (E), when the system is running normally in the absence of partitions, how does the system trade off latency (L) and consistency (C)?”

Brewer himself points out this nuance, writing, “The choice between C and A can occur many times within the same system at very fine granularity; not only can subsystems make different choices, but the choice can change according to the operation or even the specific data or user involved.”

In some contexts, the binary nature of this choice recedes even further. At Figma, for example, the tradeoffs are “relatively subtle.”

“Anyone who's looked into CAP theorem discovers that tradeoffs aren’t as scary as they first seem,” writes Figma CTO Kris Rasmussen. “They result in important but relatively subtle trade-offs most people don't notice. In our case, we took a tradeoff on availability: if the application process on the server were to go down, clients are temporarily unable to load a file. These failures happen so rarely, and we’re now able to resolve them so quickly that it’s unlikely you’ll even notice.”

This subtlety deepens when you realize, as Brewer writes, that these properties are more continuous than strictly binary. “Availability is obviously continuous from 0 to 100 percent,” he writes, “but there are also many levels of consistency, and even partitions have nuances, including disagreement within the system about whether a partition exists.”

How Developers Can Use the CAP Theorem

At first glance, the CAP theorem looks too complex or too simple to be useful to many developers.

If you don’t know the acronym and its nuances, it’ll feel awkward to reference and unwieldy. But once you do know the basics, your knowledge can appear misleadingly superficial. As soon as you learn what C, A, and P stand for, after all, you learn it’s not quite as simple as picking two of them.

For developers unfamiliar with distributed systems engineering, however, the CAP theorem provides a way into the discussion around distributed systems and a lens through which to make informed technical decisions. With just a little more understanding, developers can gain a new way of making technical decisions in distributed systems contexts.

As Brewer wrote twelve years after its debut, the key is to remember, “There is an incredible range of flexibility for handling partitions and recovering from them.” Therefore, he continues, “The modern CAP goal should be to maximize combinations of consistency and availability that make sense for the specific application.” As you do so, think about how you can plan for how your system will operate during a partition and how it will recover afterward.

In this way of thinking, the CAP theorem is less of an absolute law from on high and more like a lens through which to weigh the tradeoffs of different decisions in complex systems.

“This expression of CAP served its purpose,” Brewer writes, “which was to open the minds of designers to a wider range of systems and tradeoffs.” Similarly, developers can use the CAP theorem to think through priorities and their consequences.

Developers can let the theorem map the decision set available, and by learning from how others have navigated this matrix, they can predict which choices will have the most optimal effects. As Rasmussen writes, “In practice, we expect the window in which things could go wrong to be very small. Even if a server crashes, we expect customers to not lose much work. This is a tradeoff we have to make.”

Here, theory and practice align: There are tradeoffs you have to make. Rasmussen writes, “The challenge for us and any other engineering team is to find the right set of trade-offs for our use case.”

Don’t Let Mental Models Become Blinders

The CAP theorem is extremely useful – both for experienced distributed systems engineers and developers just barely building familiarity in the field – but it can be limiting if you totalize it.

Ben Darnell, CTO at Cockroach Labs, puts it well, writing, “The CAP theorem focuses on a single narrow tradeoff between consistency and availability, but this doesn’t cover all the causes of unavailability or solutions to unavailability.”

The CAP theorem is a lens that, used well, helps you see more clearly than you could otherwise. With the CAP theorem and its nuances in mind, developers can better parse distributed systems' complexity and consider the advantages and disadvantages of their technical decisions.

Readyset Is an AP system

Readyset augments source-of-truth relational databases in mission-critical user-facing applications. Given its position in the stack, availability (and low latencies) trump consistency: Readyset speeds up all database reads that can tolerate relaxed consistency while still ensuring that developers have a straightforward path to service other reads that require stronger forms of consistency.

Authors